IMOS - Data Lake Best Practices

Home > Data Lake > Data Lake Best Practices

Requires the Data Lake module.

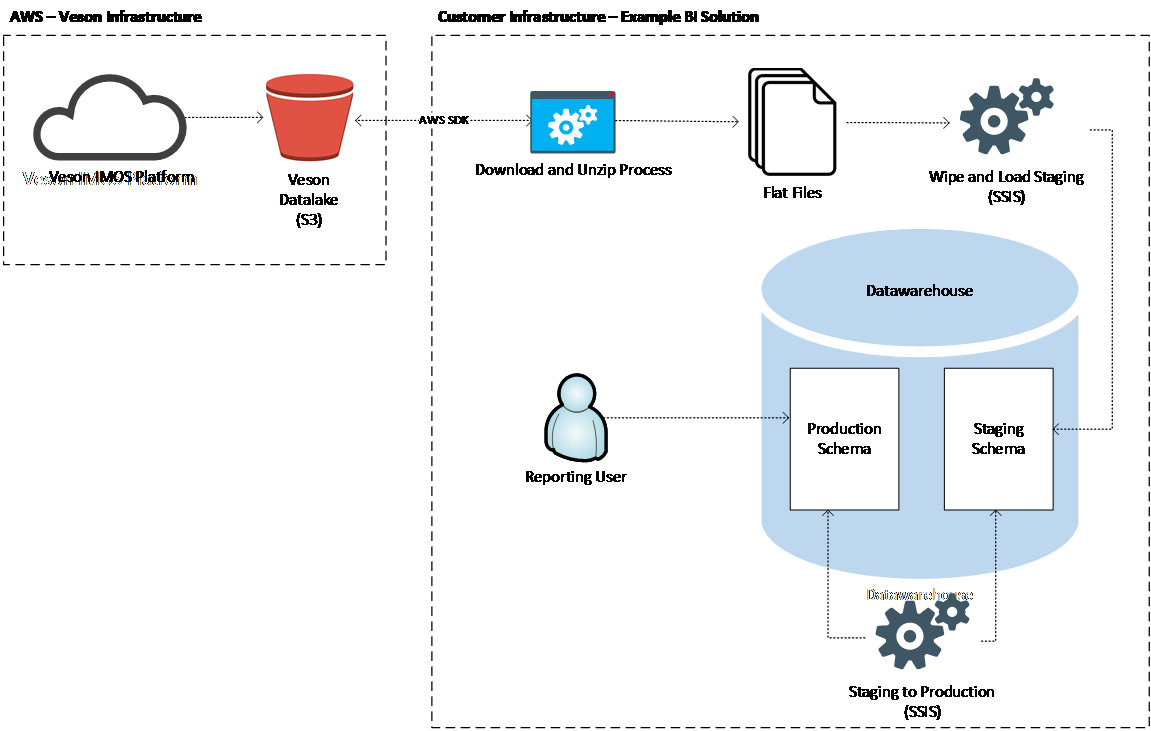

This article describes some best practices that clients may choose to adopt when interacting with the Veson IMOS Platform (IMOS) Data Lake. There are many ways to ingest and process data for reporting purposes; this article lays out one option that is widely utilized:

Technologies & Patterns

This article assumes familiarity with the following technologies and patterns:

AWS S3

The AWS SDK

The AWS CLI

Microsoft SQL Server

SQL Server Integration Services

Relational database design concepts

Architecture Diagram

Downloading Data

Data can be downloaded from the Data Lake on a regular basis using either a scripted call to the AWS CLI or by building a lightweight application that leverages the AWS SDK. Veson Nautical recommends running the download process at the same cadence as the Data Lake refresh interval. By default, the refresh interval is set to once per day. However, upon request, this can be adjusted to run as often as once per hour.

Configure the download process to pull down all required files into a folder that matches the folder name observed in S3 (e.g., 2020-03-24).

Programmatically unzip all of the downloaded zip files.

Programmatically delete the zip files to save space.

The result should be a folder that contains an uncompressed copy of the latest flat files, ready for ingestion. Veson Nautical recommends logging the output of the download process. It is also worthwhile to set up automated alerts for the scenario where downloads are interrupted or disk space problems occur.

Ingesting Data via ETL

Populate the Staging Tables

A recommended pattern for ingesting flat files into a data warehouse is to use Microsoft SSIS (SQL Server Integration Services) in conjunction with a staging schema and the "wipe and load" technique.

A staging schema is a set of tables that essentially mimic the structure of the flat files that are to be ingested. There should be one staging table for each file type.

The data in the staging tables are ephemeral; after ETL is complete, they will be empty. For that reason, reports or other data warehouse consumers should not be permitted to access the staging tables directly. Permissions can be applied at the schema level to implement this restriction.

Create an SSIS package that:

Wipes the staging tables via a TRUNCATE command

Loads each flat file into the appropriate staging table

Sends an alert if anything fails during the process

Update the Production Data Warehouse Tables

With the latest data now sitting in the staging tables, the penultimate step is to update the production tables in the data warehouse. There are several ways to do this; however, the goal here is to ensure that any reports being executed are not interrupted. Wipe and load cannot be used on production tables because that would lead to an inconsistent experience for people running reports.

Create an SSIS package that:

Iterates over the list of staging tables

For each staging table, executes an appropriate T-SQL statement to merge changes from the staging table to the production table

Pays attention to the absence of records in a staging table, which indicates records have been deleted since the last refresh

Other Tips

Consider where to place transactions in the process, to ensure reporting clients do not experience inconsistent reads across different entities.

Consider using snapshot isolation.

Monitor transaction log utilization for larger load operations.

Truncate the staging tables afterward, to avoid confusion.

Instrument the ETL process with appropriate logging and alerting.

Centralize any transformation logic in the Staging ?? Production step. It is generally easier to maintain if the staging tables match the flat files precisely.

Local Clean Up

After the flat files have been successfully ingested, be sure to programmatically clean up the download folder structure on your local file system. Veson Nautical recommends implementing a sliding window delete mechanism so that you have n days' worth of data on your local system for diagnostics purposes.